What if your brain could talk to you?

‘That’s a silly question’, I hear you say, ‘My brain already talks to me.’

To the best of our current knowledge, the mind is the brain, and the mind is always talking. Indeed, it’s where all the talking gets started. We have voices in our heads (a cacophony of different thoughts, interests, fears, and hopes) vying for attention. We live in a stream of self-talk. We build up detailed narratives about our lives. We are always spinning yarns, telling stories.

This is all probably true. But our brains don’t tell us everything. The stream of self-talk in which we are situated (or should that be ‘by which we are constituted’?) sits atop a vast, churning sea of sub-conscious neurological activity. We operate on a ‘need to know’ basis and we don’t need to know an awful lot. Many times we sail through this sea of activity unperturbed. But sometimes we don’t. Sometimes what is happening beneath the surface is deeply problematic, hurtful to ourselves and to others, and occasionally catastrophic. Sometimes our brains only send us warning signals when we are about to get washed up on the rocks.

Take epilepsy as an example. The brains of those who suffer from epilepsy occasionally enter into cycles of excessive synchronous neuronal activity. This results in seizures (sometimes referred to as ‘fits’), which can lead to blackouts and severe convulsions. Sometimes these seizures are preceded by warning signs (e.g. visual auras), but many times they are not, and even when they are, the signs often come too late in the day, well after anything can be done to avert their negative consequences. What if the brains of epileptics could tell them something in advance? What if certain patterns of neuronal activity were predictive of the likelihood of a seizure and what if this information could be provided to epileptic patients in time for them to avert a seizure?

That’s the promise of a new breed of predictive brain implants. These are devices (sets of electrodes) that are implanted into the brains of epileptics and, through statistical learning algorithms, used to predict the likelihood of seizures from patterns of neuronal activity. These devices are already being trialled on epileptic patients and proving successful. Some people are enthusiastic about their potential to help those who suffer from the negative effects of this condition and, as you might expect, there is much speculation about other use cases for this technology. For example, could predictive brain implants tell whether someone is going to go into a violent rage? Could this knowledge prove useful in crime prevention and mitigation?

These are important questions, but before we get too carried away with the technical possibilities (or impossibilities) it’s worth asking some general conceptual and ethical questions. Using predictive brain implants to control and regulate behaviour might seem a little ‘Clockwork Orange’-y at first glance. Is this technology going to be a great boon to individual liberty, freeing us from the shackles of unwanted neural activity? Or is it going to be a technique of mind control, the ultimate infringement of human autonomy? These are some of the questions taken up in Frederic Gilbert’s paper ‘A Threat to Autonomy? The Intrusion of Predictive Brain Implants’. I want to offer some of my own thoughts on the issue in the remainder of this post.

1. The Three Types of Predictive Brain Implants

Let’s start by clarifying the technology of interest. Brain implants of one sort or another have been around for quite some time. So-called ‘deep brain stimulators’ have been used to treat patients with neurological and psychiatric conditions for a couple of decades. The most common use is for patients with Parkinson’s disease, who are often given brain implants that help to minimise or eliminate the tremors associated with their disease. It is thought that over 100,000 patients worldwide have been implanted with this technology.

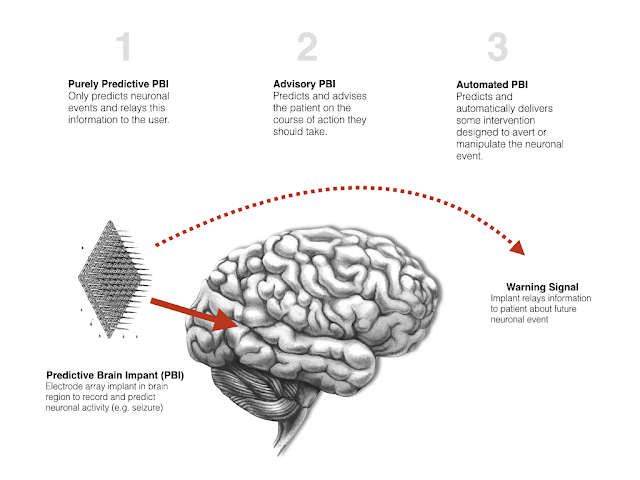

Predictive brain implants (PBIs) are simply variations on this technology. Electrodes are implanted in the brains of patients. These electrodes record and analyse the electrical signals generated by the brain. They then use this data to learn and predict when a neuronal event (such as a seizure) is going to take place. At the moment, the technology is in its infancy, essentially just providing patients with warning signals, but we can easily imagine developments in the technology, perhaps achieved by combining it with other technologies. Gilbert suggests that there are three possible forms for predictive brain implants:

Purely Predictive: These are PBIs that simply provide patients with predictive information about future neuronal events. Given the kinds of events that are likely to be targets for PBIs, this information will probably always have a ‘warning signal’-like quality.

Advisory: These are PBIs that provide predictions about future neuronal events, as well as advice to patients about how to avert/manipulate those neuronal events. For example, in the case of epilepsy, a patient could be advised to take a particular medication or engage in some preventive behaviour. The type of advice that could be given could be quite elaborate, if the PBI is combined with other information processing technologies.

Automated: These are PBIs that predict neuronal events and then deliver some treatment/intervention that will avert or manipulate that event. They will do this without first warning or seeking the patient’s consent. This might sound strange, but it is not that strange. There are a number of automated-treatment devices in existence already, such as heart pacemakers or insulin pumps, and they regulate biochemical processes without any meaningful ongoing input from the patient.

The boundary between the first two categories is quite blurry. Given that PBIs necessarily select specific neuronal events from the whirlwind of ongoing neuronal events for prediction, and given that they will probably feed this selective information to patients in the form of warning signals, the predictions are likely to carry some implicit advice. Nevertheless, the type of advice provided by advisory PBIs could, as mentioned above, be more or less elaborate. It could range from the very general ‘Warning: you ought to do something to avert a seizure’ to the more specific ‘warning: you ought to take medication X, which can be purchased at store Y, which is five minutes from your present location’.
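To make the three-way distinction concrete, here is a toy sketch of how the same prediction might be handled under each modality. Everything in it is my own illustration rather than anything taken from Gilbert’s paper or from a real device: the names `Modality`, `Response` and `respond`, the idea of a single risk score between 0 and 1, and the 0.8 threshold are all assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Modality(Enum):
    PREDICTIVE = "purely predictive"
    ADVISORY = "advisory"
    AUTOMATED = "automated"


@dataclass
class Response:
    warning: Optional[str] = None          # message shown to the patient
    advice: Optional[str] = None           # explicit recommendation, if any
    intervention_delivered: bool = False   # treatment given without asking


def respond(modality: Modality, risk: float, threshold: float = 0.8) -> Response:
    """What a hypothetical PBI does with a predicted event risk in [0, 1].

    ASSUMPTION: a single risk score and a fixed threshold stand in for
    whatever the real statistical learning algorithm produces.
    """
    if risk < threshold:
        return Response()  # no event predicted, so the patient hears nothing
    if modality is Modality.PREDICTIVE:
        return Response(warning="Warning: a seizure is likely")
    if modality is Modality.ADVISORY:
        return Response(
            warning="Warning: a seizure is likely",
            advice="Take medication X",
        )
    # AUTOMATED: act without first warning or seeking consent
    return Response(intervention_delivered=True)
```

Note that in this sketch the purely predictive and advisory branches differ only in whether the `advice` field is filled in, which is one way of seeing why the boundary between them is blurry.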

The different types of PBI could have very different impacts on personal autonomy. At first glance, it seems like an automated PBI would put more pressure on individual autonomy than a purely predictive PBI. Indeed, it seems like a purely predictive or advisory PBI could actually benefit autonomy, but that first glance might be misleading. We need a more precise characterisation of autonomy, and a more detailed analysis of the different ways in which a PBI could impact upon autonomy, before we can reach any firm conclusions.

2. The Nature of Autonomy

Many books and articles have been written on the concept of ‘autonomy’. Generations of philosophers have painstakingly identified necessary and sufficient conditions for its attainment, subjected those conditions to revision and critique, scrapped their original accounts, started again, given up and argued that the concept is devoid of meaning, and so on. I cannot hope to do justice to the richness of the literature on this topic here. Still, it’s important to have at least a rough and ready conception of what autonomy is and the most general (and hopefully least contentious) conditions needed for its attainment.

I have said this before, but I like Joseph Raz’s general account. Like most people, he thinks that an autonomous agent is one who is, in some meaningful sense, the author of their own life. In order for this to happen, he says that three conditions must be met:

Rationality condition: The agent must have goals/ends and must be able to use their reason to plan the means to achieve those goals/ends.

Optionality condition: The agent must have an adequate range of options from which to choose their goals and their means.

Independence condition: The agent must be free from external coercion and manipulation when choosing and exercising their rationality.

I have mentioned before that you can view these as ‘threshold conditions’, i.e. conditions that simply have to be met in order for an agent to be autonomous, or you can have a slightly more complex view, taking them to define a three dimensional space in which autonomy resides. In other words, you can argue that an agent can have more or less rationality, more or less optionality, and more or less independence. The conditions are satisfied in degrees. This means that agents can be more or less autonomous, and the same overall level of autonomy can be achieved through different combinations of the relevant degrees of satisfaction of the conditions. That’s the view I tend to favour. I think there possibly is a minimum threshold for each condition that must be satisfied in order for an agent to count as autonomous, but I suspect that the cases in which this threshold is not met are pretty stark. The more complicated cases, and the ones that really keep us up at night, arise when someone scores high on one of the conditions but low on another. Are they autonomous or not? There may not be a simple ‘yes’ or ‘no’ answer to that question.
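For readers who like to see this sort of thing written down, here is a minimal sketch of the ‘degrees’ view. It is only an illustration under loud assumptions: scoring each condition on a 0 to 1 scale, the 0.2 minimum threshold, and especially the use of a simple unweighted average for the overall level are my own placeholders, not part of Raz’s account.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutonomyProfile:
    # ASSUMPTION: each condition is scored from 0.0 (absent) to 1.0 (fully met)
    rationality: float
    optionality: float
    independence: float

    def meets_thresholds(self, minimum: float = 0.2) -> bool:
        """True if every condition clears the (hypothetical) minimum threshold."""
        return min(self.rationality, self.optionality, self.independence) >= minimum

    def overall(self) -> float:
        """ASSUMPTION: overall autonomy is an unweighted mean of the three scores."""
        return (self.rationality + self.optionality + self.independence) / 3


# Two different combinations that come out at (approximately) the same overall level
lopsided = AutonomyProfile(rationality=0.9, optionality=0.3, independence=0.6)
balanced = AutonomyProfile(rationality=0.6, optionality=0.6, independence=0.6)

# A stark case: one condition falls below the minimum threshold
coerced = AutonomyProfile(rationality=1.0, optionality=1.0, independence=0.1)
```

On this toy scoring, `lopsided` and `balanced` reach roughly the same overall level by different routes, while `coerced` fails the threshold test despite scoring highly elsewhere. The hard cases are the lopsided ones, and no averaging rule settles them.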

Anyway, using the three conditions we can formulate the following ‘autonomy principle’ or ‘autonomy test’:

Autonomy principle: An agent’s actions are more or less autonomous to the extent that they meet the (i) rationality condition; (ii) optionality condition and (iii) independence condition.

We can then use this principle to determine whether, and to what extent, PBIs interfere with or undermine an agent’s autonomy.

What would such an analysis reveal? Well, looking first to the rationality condition, it is difficult to see how a PBI could undermine this. Unless it malfunctions or is misdirected, it is unlikely that a PBI would undermine our capacity for rational thought. Indeed, the contrary would seem to be the case. You could argue that a condition such as epilepsy is a disruption of rationality. Someone in the grip of a seizure is no longer capable of rational thought. Consequently, using the PBI to avert or prevent their seizure might actually increase, not decrease, their rationality.

Turning to the other two conditions, things become a little more unclear. The extent to which autonomy is enhanced or undermined depends on the type of PBI being used.

3. Do advisory PBIs support or undermine autonomy?

Let’s start by looking at predictive/advisory PBIs. I’ll treat these as a pair since, as I stated earlier on, a purely predictive PBI probably does carry some implicit advice. That said, the advice would be different in character. The purely predictive PBI will provide a vague, implied piece of advice (“do something to stop x”). The advisory PBI could provide very detailed, precise advice, perhaps based on the latest medical evidence (“take medication x in ten minutes time and purchase it from vendor y”). Does this difference in detail and specification matter? Does it undermine or promote autonomy?

Consider this first in light of the optionality condition. On the one hand, you could argue that a vague and general bit of advice is better because it keeps more options open. It advises you to do something, but leaves it up to you exactly what that is. The more specific advice seems to narrow the range of choices, and this may seem to reduce the degree of optionality. That said, the effect here is probably quite slight. The more specific advice is not compelled or forced upon you (more on this in a moment), so you are arguably left in pretty much the same position as someone getting the more general advice, albeit with a little more knowledge. Furthermore, there is the widely-discussed ‘paradox of choice’ which suggests that having too many options can be a bad thing for autonomy because it leaves you paralysed in your decisions. Having your PBI specify an option might help you to break that paralysis. That said, this paradox of choice may not arise in the kinds of scenarios in which PBIs get deployed. The paradox of choice is best documented in relation to consumer behaviours and it’s not clear how similar this would be to decisions about which intervention to pick to avoid a neuronal event.

The independence condition is possibly more important. At first glance, it seems pretty obvious that an advisory PBI does not undermine the independence condition. For one thing, the net effect of a PBI may be to increase your overall level of independence because it will make you less reliant on others to help you out and monitor your well-being. This is one thing Gilbert discusses in his paper on epileptic patients. He was actually involved with one of the first experimental trials of PBIs and interviewed some of the patients who received them. One of the patients on the trial reported feeling an increased level of independence after getting the implant:

…the patient reported: “My family and I felt more at ease when I was out in the community [by myself], […] I didn’t need to rely on my family so much.” These descriptions are rather clear: with sustained surveillance by the implanted device, the patient experienced novel levels of independence and autonomy.

(Gilbert 2015, 7)

In addition to that, the advisory PBI is merely providing you with suggestions: it does not force them upon you. You are not compelled to take the medication or follow the prescribed steps. This doesn’t involve manipulation or coercion in the sense usually discussed by philosophers of autonomy.

So things look pretty good for advisory PBIs on the independence front, right? Well, not so fast. There are three issues to bear in mind.

First, although the advice provided by the PBI may not be coercive right now, it could end up having a coercive quality. For example, it could be that following the advice provided by the PBI is a condition of health insurance: if you don’t follow the advice, you won’t be covered by your health insurance policy. That might lend a coercive air to the phenomenon.

Second, people may end up being pretty dependent on the PBI. People might not be inclined to second guess or question the advice provided, and may always go along with what it says. This might make them less resilient and less able to fend for themselves, which would undermine independence. We already encounter this phenomenon, of course. Many of us are already dependent on the advice provided to us by services like Google Maps. I don’t know how you feel about that dependency. It doesn’t bother me most of the time, though there have been occasions on which I have lamented my overreliance on the technology. So if you think that dependency on Google Maps undermines autonomy, then you might think the same of an advisory PBI (and vice versa).

Third, and finally, the impact of an advisory PBI on independence, specifically, and autonomy, more generally, probably depends to a large extent on the type of neuronal event it is being used to predict and manipulate. An epileptic on the cusp of a seizure is already in a state of severely compromised autonomy. They have limited options and limited independence in any event. The advisory PBI might impact negatively on those variables in moments just prior to the predicted seizure, but the net effect of following the advice (i.e. possibly avoiding the seizure) probably compensates for those momentary negative impacts. Things might be very different if the PBI was being used to predict whether you were about to go into a violent rage or engage in some other immoral behaviour. We don’t usually think of violence or immorality as diseases of autonomy so there may be no equivalent compensating effect. In other words, the negative impact on autonomy might be greater in these use-cases.

4. Do automated PBIs support or undermine autonomy?

Let’s turn finally to the impact of automated PBIs on autonomy. Recall, these are PBIs that predict neuronal events and use this information to automatically deliver some intervention to the patient that averts or otherwise manipulates those neuronal events. This means that the decisions made on foot of the prediction are not mediated through the patient’s conscious reasoning faculties; they are dictated by the machine itself (by its code/software). The patient might be informed of the decisions at some point, but this has no immediate impact on how those decisions get made.

This use of PBIs seems to be much more compromising of individual autonomy. After all, the automated PBI does not treat the patient as someone whose input is relevant to ongoing decisions about medical treatment. The patient is definitely not given any options and they are not even respected as an independent autonomous agent. Consequently, the negative impact on autonomy seems clear.

But we have to be careful here. It is true that the patient with the automated PBI does not exercise any control over their treatment at the time that the treatment is delivered, but this is not to say they exercise no control at all. Presumably, the patient originally consented to having the PBI implanted in their brain. At that point in time, they were given options and were treated as an independent autonomous agent. Furthermore, they may retain control over how the device works in the future. The type of treatment automatically delivered by the PBI could be reviewed over time, by the patient, in consultation with their medical team. During those reviews, the patient could once again exercise their autonomy over the device. You could, thus, view the use of the automated PBI as akin to a commitment contract or Ulysses contract. The patient is autonomously consenting to the use of the device as a way of increasing their level of autonomous control at all points in their lives. This may mean losing autonomy over certain discrete decisions, but gaining it in the long run.

Again, the type of neuronal event that the PBI is used to avert or manipulate would also seem crucial here. If it is a neuronal event that otherwise tends to compromise or undermine autonomy, then it seems very plausible to argue that use of the automated PBI does not undermine or compromise autonomy. After all, we don’t think that the diabetic has compromised their autonomy by using an automated insulin pump. But if it is a neuronal event that is associated with immorality and vice, we might feel rather differently.

I should add that all of this assumes that PBIs will be used on a consent-basis. If we start compelling certain people to use them, the analysis becomes more complex. The burgeoning literature on neurointerventions in the criminal law would be useful for those who wish to pursue those issues.

5. Conclusion

That brings us to the end. In keeping with my earlier comments about the complex nature of autonomy, you’ll notice that I haven’t reached any firm conclusions about whether PBIs undermine or support autonomy. What I have said is that ‘it depends’. But I think I have gone beyond a mere platitude and argued that it depends on at least three things: (i) the modality of the PBI (general advisory, specific advisory or automated); (ii) the impact on the different autonomy conditions (rationality, optionality, independence) and (iii) the neuronal events being predicted/manipulated.